The basics at a glance

To understand how Google’s search essentially works, there are some basic terms that you should know.

Crawler, Spider, Robot, or whatever you want to call it

A (web) crawler is practically a synonym for spider, robot, bot, and so on. It analyzes and indexes data from the Internet. This means that it looks at your website, reads the content there, and then saves information about it. Pages that have already been indexed are also checked for updates and new content. This process builds the well-founded body of information that lets users receive relevant content for their search queries. The crawler is therefore the indispensable basis of every search engine. For this reason, the crawler always matters when you want to optimize your website for search engines.

However, the Internet is not always searched by a single bot. Google uses several of them, for example, one only for images. The bots are therefore subdivided according to a clear thematic allocation.

This is how the indexing process works

In principle, you can imagine the crawler as a spider, which is why “spider” is one of its many names. Like a spider, a bot “crawls” along the threads of the web. Of course, threads are just a metaphor here. In principle, however, the Internet works similarly: internal and external links connect individual websites into an extensive network.

Thus, the spider follows the individual links on the websites and in this way keeps finding other websites and their sub-pages. Webmasters can also submit sitemaps in the Google Search Console, which the crawler examines as well. In this way, you help it recognize what should end up in the index. As a result, more and more content is indexed and combined into a continuously growing content library. All of this content is then made available to searchers on the Internet: when you enter a search term, the search engine algorithm searches this index to provide you with the appropriate answer.
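The link-following idea can be sketched in a few lines of Python. This is only a toy illustration, not Google’s actual crawler: the `TOY_WEB` dictionary, its URLs, and the `crawl` function are hypothetical stand-ins for real pages and real HTTP requests. It shows why a page that nobody links to is only discovered when it is supplied as an extra starting point, roughly the role a sitemap plays.

```python
from collections import deque

# Hypothetical toy web: each URL maps to the links found on that page.
TOY_WEB = {
    "example.com/": ["example.com/blog", "example.com/shop"],
    "example.com/blog": ["example.com/blog/post-1", "example.com/"],
    "example.com/shop": ["example.com/shop/item-1"],
    "example.com/blog/post-1": [],
    "example.com/shop/item-1": ["example.com/"],
    "example.com/orphan": [],  # no page links here
}


def crawl(start_urls, web):
    """Follow links breadth-first and return the URLs in discovery order."""
    seen = set()
    queue = deque()
    for url in start_urls:
        if url not in seen:
            seen.add(url)
            queue.append(url)

    index = []
    while queue:
        url = queue.popleft()
        if url not in web:
            continue  # unknown page, nothing to read
        index.append(url)
        for link in web[url]:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return index


# Following links alone never reaches the orphan page.
found_by_links = crawl(["example.com/"], TOY_WEB)

# Assumption: a sitemap simply contributes additional starting URLs.
found_with_sitemap = crawl(["example.com/", "example.com/orphan"], TOY_WEB)
```

A breadth-first queue is used here only because it is the simplest way to visit every reachable page exactly once; real crawlers additionally schedule, prioritize, and revisit pages.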

This is how you show the crawler what should and should not be indexed

You can help the crawler find your pages. Internal links between pages and from the site navigation to the various sub-pages are particularly relevant for this. They make these pages visible to the crawler, since the bot can follow the links. It is also useful to submit a sitemap, as it is a reliable way of reporting new or updated content to Google. Regular publications on your website are an important signal to Google and convey that your site should be crawled at regular intervals.

Of course, you can also tell the Googlebot which content it does not need to look at or index. To exclude a page from the index, you can mark the page itself with a noindex directive, for example in a robots meta tag. To control crawling more precisely, you can work with a robots.txt file. It is stored in the root directory of a domain and determines which URLs the crawler may visit. Here you can expressly exclude the crawler from certain areas of the site using a Disallow statement.
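To see how a disallow rule in a robots.txt file takes effect, the following sketch uses Python’s standard `urllib.robotparser` module. The rules, the paths `/internal/` and `/checkout/`, and the `may_crawl` helper are made-up examples, not a recommendation for any particular site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, as it would sit in the domain's root directory.
ROBOTS_TXT = """\
User-agent: *
Disallow: /internal/
Disallow: /checkout/
"""

# Parse the rules from the string instead of fetching them over the network.
parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_crawl(url, user_agent="Googlebot"):
    """Return True if the parsed rules allow this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(may_crawl("https://example.com/blog/"))
print(may_crawl("https://example.com/internal/report"))
```

Keep in mind that these rules only tell a well-behaved crawler which URLs it may visit; whether a page ends up in the index is a separate question.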

Limits of crawlers

Of course, there are also moments when web crawlers reach their limits. This is the case, for example, in the deep web or dark web. Content there is deliberately placed so that it cannot be found easily and remains invisible to the average Internet user.

The algorithm, PageRank, ranking systems – what are they?

After the crawler has searched and found various pages on the Internet, the algorithms come into play. They weight and evaluate the individual websites. In practice, they do the pre-sorting so that, in the end, you get a correct and relevant answer to your search query. The various ranking systems of a search engine search through the billions of web pages that the crawler has added to the index, and you receive the answer to your question in seconds.

History of the algorithm

The algorithm was born at the end of the 1990s under the name PageRank. Google founders Larry Page and Sergey Brin invented it, and it was to form the basis for the success of the search engine. The aim was to search the Internet for websites so that suitable pages are displayed in response to search queries. In the beginning, however, the weighting was based only on the link structure: the more external links pointed to a page, the more important the page was considered and the higher its rating. The ranking in the SERPs was determined based on this weighting.
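The link-based weighting can be illustrated with a simplified PageRank calculation. This is a minimal sketch under stated assumptions, not Google’s production algorithm: the four-page graph `LINKS` is invented, and the damping factor of 0.85 and the 50 iterations are commonly used textbook values chosen here for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by repeated redistribution of rank along links.

    links maps every page to the list of pages it links to.
    All link targets must also appear as keys.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives a small base share regardless of links.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page passes its rank on in equal parts to its link targets.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # A page without outgoing links spreads its rank evenly.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical link graph: A, B, and D all link to C; C links back to A.
LINKS = {
    "A": ["C"],
    "B": ["C"],
    "C": ["A"],
    "D": ["C"],
}

ranks = pagerank(LINKS)
best_page = max(ranks, key=ranks.get)
```

In this toy graph, page C collects links from three other pages and therefore ends up with the highest score, which mirrors the early idea that more incoming links mean a more important page.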

This is how the algorithm continues to develop today

In the meantime, there are, of course, a multitude of factors that influence the ranking of a page. Links are just one of many. The problem is that, for a search query, there are often several million pages on the Internet that could provide a suitable answer. The algorithm must therefore evaluate each page to select the ones that best suit the user. This is a highly complex process. Several hundred so-called ranking factors are used to analyze a website, and most of them are not even officially known. However, the individual factors can be roughly divided into assessment areas, within which further criteria are then considered.

Which factors does the Google algorithm weight?

There are very different areas that the algorithm analyzes to offer you the best possible result. These include:

- Content – The rule here is clear: Quality > Quantity. High-quality content will convince and retain your website visitors.

- Links – External and internal links from relevant and suitable pages give your users added value. Links from relevant sources are therefore essential.

- User Experience – If your site is not easy and intuitive to use, visitors will not stay on it for long. Therefore, make sure that your visitors can easily interact with the website.

- Social Signals – The signals that the search engine receives from social media are becoming increasingly important. Here, too, the focus is on high-quality content that users are happy to like and share. Such interactions send positive signals to the search engine and improve the ranking.

- Brand – A well-established and well-positioned brand will help you achieve better rankings. So work on your brand image and brand awareness.

- Technology – The technical foundation of a website should always be impeccable. If there are errors or problems somewhere, it is important to correct them, as they can otherwise hurt your positioning in the SERPs.

These criteria are part of the process that a search algorithm goes through to evaluate the relevance and quality of content. Of course, each category has countless sub-areas, which are also carefully analyzed. To adapt your page accordingly, you can orient yourself on Google’s publicly available guidelines.

What are the key factors that determine which results you will get?

According to Google, various factors influence which results are shown to you in the SERPs. The following five main categories, which build on each other step by step, are essential:

Word analysis

Semantic relationships now play a significant role in search queries. The search engine algorithm analyzes the context and meaning of the entered words to achieve the best possible result. Various language models help decipher these relationships. This word analysis is then used to find the most relevant match in the index.

Word analysis is not only about understanding the context. It is also about classifying search queries thematically to make the corresponding answer even more relevant. If a search contains keywords such as “opening times,” the search engine knows directly that the user does not want results about products or the company’s history but wants to know precisely when the store opens and closes. If the search query contains the word “images,” the searcher is directed to Google Images. The algorithm also checks whether content is up to date, so that it does not show you outdated and possibly no longer relevant content.
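The thematic classification of a query can be pictured with a deliberately simple, rule-based sketch. Real search engines rely on language models for this; the trigger phrases, the intent labels, and the `classify_query` function below are hypothetical and only mirror the two examples from the text.

```python
# Hypothetical trigger phrases per intent; a real system would learn these.
INTENT_TRIGGERS = {
    "opening_hours": ("opening times", "opening hours"),
    "image_search": ("images", "pictures"),
}


def classify_query(query):
    """Return the first intent whose trigger phrase occurs in the query."""
    text = query.lower()
    for intent, triggers in INTENT_TRIGGERS.items():
        if any(trigger in text for trigger in triggers):
            return intent
    return "general"


print(classify_query("Bakery Miller opening times"))
print(classify_query("eiffel tower images"))
print(classify_query("history of the bicycle"))
```

Plain substring matching is used here only to keep the idea visible; it cannot capture context or meaning the way the language models mentioned above are meant to.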

Matching the search term

Once the meaning of the word or phrase is clear, the search index is searched for pages that match the query. Special attention is paid to keywords that match the search query. For this reason, various parts of a page, such as titles, headings, and even entire text passages, are examined. Once this keyword matching has been carried out, past interaction data is reviewed to analyze how users have received the result so far and whether it was helpful. In this way, queries can be answered with even more relevant results.
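Keyword matching across titles, headings, and body text can be sketched as follows. The pages in `PAGES`, the field weights, and the scoring rule are assumptions made for illustration; Google does not publish how it weights these parts of a page.

```python
import re

# Assumed weights: a match in the title counts more than one in the body.
FIELD_WEIGHTS = {"title": 3.0, "headings": 2.0, "body": 1.0}

# Hypothetical mini index of three pages.
PAGES = {
    "example.com/bike-repair": {
        "title": "Bike repair guide",
        "headings": "Fixing a flat tire",
        "body": "This guide explains how to repair a bike tire at home.",
    },
    "example.com/bike-shop": {
        "title": "City bike shop",
        "headings": "Our bikes",
        "body": "We sell city bikes and accessories.",
    },
    "example.com/cake": {
        "title": "Chocolate cake recipe",
        "headings": "Ingredients",
        "body": "Bake the cake for forty minutes.",
    },
}


def tokenize(text):
    """Split text into lowercase words and numbers."""
    return re.findall(r"[a-z0-9]+", text.lower())


def match_score(query, page):
    """Sum the weighted number of query-term occurrences per field."""
    terms = set(tokenize(query))
    score = 0.0
    for field, weight in FIELD_WEIGHTS.items():
        hits = sum(1 for token in tokenize(page[field]) if token in terms)
        score += weight * hits
    return score


def search(query, pages):
    """Return matching URLs, best score first; pages without a match are dropped."""
    scored = [(match_score(query, page), url) for url, page in pages.items()]
    return [url for score, url in sorted(scored, reverse=True) if score > 0]


results = search("bike repair", PAGES)
```

This sketch covers only the matching step; the review of interaction data described above would be an additional signal applied afterwards.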

Since the algorithm is a machine-learning system, it can draw conclusions from previous data and signals and use them for future queries. The algorithm looks at all search queries objectively without reading anything into them. Google therefore does not consider subjective attitudes, intentions, or points of view when matching the search term.

Ranking of useful pages

This group of key factors covers the ranking factors, which determine which results are considered useful and therefore get a better position in the search results. In addition to the user-friendliness of the page, an optimized loading speed, the quality of the content, and so on, links are an important signal for the search engine.

However, caution is required here! Not every link has a positive effect. Make sure that your page is not linked to by spam websites. Often, these websites aim to deceive or mislead the search engine. Spam and the machinations that go with it are something that Google doesn’t like to see. For this reason, penalties and negative consequences are more likely in such a case than an improvement in your ranking. To stay on the safe side and avoid adverse effects for your website, you should follow Google’s guidelines for webmasters.

The best results

The next step is to analyze whether the information matches the search query. To be clear: the entire process so far runs in the background in a matter of seconds, so you still have no result on your screen.

Often there is a huge number of results that match a search query. So which ones are ultimately shown to the user? To decide this, a wide variety of information and content from the index is analyzed, and the most suitable results are then shown to you. This works because the individual ranking factors are continually adapted to the constantly evolving web, which enables even better search results to be delivered. For example, the following things are examined:

- Which browser do users use?

- Which end devices are used?

- On which display sizes are the results shown?

- What are the page load times?

- How good is the Internet connection?

Contextual reference

In the last step, before you are finally presented with the search results, the personal context is examined. For this, what Google knows about you so far is taken into account. This includes, for example:

- Your current location

- Previous searches

- Search settings, and so on.

Above all, the location is an essential factor, which sometimes leads to entirely different results being displayed for the same keyword.
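How location can lead to different results for the same keyword can be shown with a small re-ranking sketch. Everything in it is hypothetical: the result list, the base scores, the `boost` value, and the `rerank_by_location` function are illustrative assumptions, not a description of how Google actually weights location.

```python
def rerank_by_location(results, user_city, boost=0.5):
    """Reorder results so that entries from the user's city gain a score boost.

    results is a list of (url, base_score, city_or_None) tuples.
    """
    def adjusted_score(result):
        _url, score, city = result
        return score + boost if city == user_city else score

    ordered = sorted(results, key=adjusted_score, reverse=True)
    return [url for url, _score, _city in ordered]


# Hypothetical results for the keyword "pizzeria" with made-up base scores.
RESULTS = [
    ("pizzeria-berlin.example", 0.70, "Berlin"),
    ("pizzeria-munich.example", 0.75, "Munich"),
    ("pizza-history.example", 0.60, None),
]

for_berlin = rerank_by_location(RESULTS, "Berlin")
for_munich = rerank_by_location(RESULTS, "Munich")
```

The same three results come back in a different order depending on the city, which is the effect described above.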

In some cases, your previous search history is also relevant for a search query. Here, Google infers interests from past searches and uses this knowledge to present you with results that are even better tailored to you in the future. If you do not want Google to do this, you can specify in your Google account which data may be used to optimize the search results.

In summary: what happens with search queries?

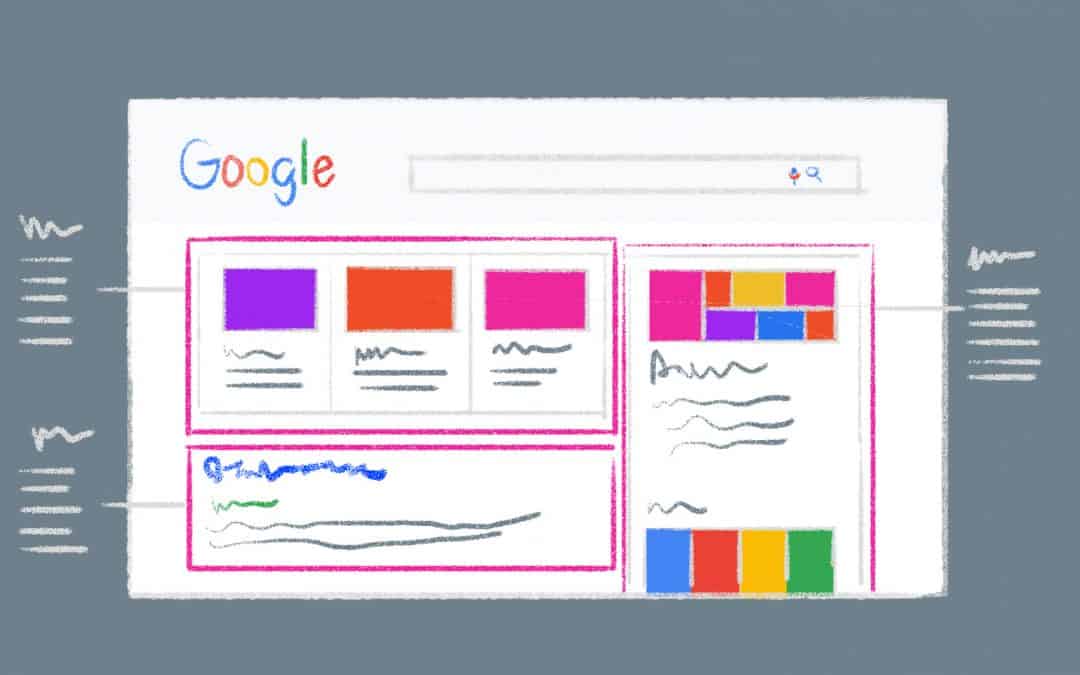

It all starts with typing a search query into the Google search field. Immediately afterward, an invisible process starts looking for the perfect match for your question. Within a short time (often less than a second), the algorithm searches the search index, a kind of library of all the content on the Internet that has been crawled so far. This “library” was sorted and arranged long before your search, which makes the algorithm’s work much easier. After it has understood the semantic context of your request, matched the search terms, and checked the rankings of the most relevant pages, it selects the results that suit you. It then includes important personal background information that puts the request in the right context. Once this entire process is over, you receive the results page you see, with the most helpful and relevant answers.

What are Google algorithm updates, and how often do they occur?

Reportedly, the Google algorithm is changed or optimized around 500 to 600 times a year. In most cases, this happens quietly and without announcement, because the improvement is continuous and often only minor changes are made. Nevertheless, there are cases in which a Google update is deliberately communicated officially. This usually happens when many webmasters are affected or when there are considerable changes to the algorithm that can significantly impact rankings. This is the case, for example, with the Core Web Vitals, which are being introduced as a new ranking factor. Although they are only due to become relevant in 2021, Google announced them in mid-2020.

There are also continual adjustments to technical innovations, such as smartphones and voice search, which are incorporated into the Google algorithm. Continuous improvement is essential to keep achieving the best possible results and to meet users’ needs.

Google algorithm and search engine optimization

These updates regularly keep SEOs around the world on their toes. In addition to the already numerous ranking factors that have to be considered in the search engine optimization process, new ones are continually being added. Even worse, adjustments that have already been made may suddenly become irrelevant.

SEO aims to understand the algorithm well enough that adjustments can be made to websites that then lead to top rankings in the search results. For this purpose, OnPage and OffPage optimizations are carried out. Both involve countless variables that affect the performance and visibility of a website. It is also essential to use the right keywords when creating and updating website content; a keyword analysis will help you with this. If you manage to tailor your website and its content precisely to the algorithm through search engine optimization, you will achieve a better ranking.

Short digression: Why are there different search results on desktop and mobile?

Sometimes it happens that the same search on Google gives you different results. Often this is due to the personal adjustments that Google makes to every search query. There can also be various other causes:

- The location you are currently at

- Search queries being answered by different servers

- Use of a Google page that corresponds to your language but belongs to a different country (google.de vs. google.ch or google.at)

- Use of different browsers or operating systems

- Influence of previous searches

- Influence of the web history or previously visited pages.

Mobile and desktop search can also differ significantly. This is related to what is known as mobile-first indexing: Google primarily crawls and indexes the mobile version of a website, so how a page performs on mobile devices carries particular weight. As part of the “mobile revolution,” the search engine giant is betting that smartphones and tablets will be used more than desktops in the future.

Conclusion: That’s why the Google algorithm makes us happy

Of course, the Google algorithm is not alone. Every search engine has similar tools to answer users’ search queries in the best possible way. Yahoo’s crawler, for example, is called Slurp. All of these systems are complex processes that often turn the SEO world upside down through regular updates. Nevertheless, search engines have long been an integral and indispensable part of our lives. “Googling” something has long been established in our vocabulary, and knowledge has seldom been obtainable more quickly and efficiently than through search engines. Google and Co. make life and the acquisition of knowledge easier for us, and all of that thanks to crawlers and algorithms.